Intuition behind self-attention

In this post I want to share the intuition around a core mechanism in modern AI models: an algorithm called self-attention.

The algorithm is only a tiny part of any given AI model, but it is striking that most state-of-the-art AI models use it (as of 2024).

This post doesn't require technical knowledge, but if you have technical knowledge you might still find the intuition helpful!

The intuition: select important parts of the original content

You can think of self-attention as an algorithm that creates a summary of some information that is passed to a model. This information can be anything - images, text, sounds, numbers.

The algorithm is clever in that the summary is not created from scratch: it simply reuses the most important passages of the original content it is summarising. This is done by assigning weights to parts of the original content according to how important they are.

Example: I could summarise the two paragraphs above by reusing their own words, rather than writing a summary that uses different words:

"self-attention creates a summary of some information reusing important passages of the original content it's summarising"

The cleverness here is that this approach allows the algorithm to work without ongoing manual supervision.
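
For readers who like code, here is a minimal sketch of the "weighted reuse" idea. It assumes each part of the content has already been turned into a short list of numbers, and that we already have an importance score for each part. The function name `weighted_summary` and all the values are made up for illustration; real models learn these numbers rather than having them typed in by hand.

```python
def weighted_summary(parts, weights):
    """Blend the original parts into one vector, using weights scaled to sum to 1."""
    total = sum(weights)
    normalized = [w / total for w in weights]
    dim = len(parts[0])
    # Each output number is a weighted average of the matching numbers in the parts.
    return [sum(w * p[i] for w, p in zip(normalized, parts)) for i in range(dim)]

# Three made-up "parts" of some content, each described by 2 numbers (hypothetical values).
parts = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
# Made-up importance scores: the third part matters twice as much as the others.
weights = [1.0, 1.0, 2.0]

summary = weighted_summary(parts, weights)
print(summary)  # [0.75, 0.75]
```

Nothing new is invented in the output: it is only a blend of the original parts, tilted towards the ones with the largest weights.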

How important parts are selected

The way importance is assigned to certain parts of the information is quite simple: the algorithm selects the bits of information that are most representative of all the source content.

Let's imagine we are applying self-attention to some written content: the most representative words in a sentence can simply be the words that are most similar to the others around them, since those are best suited to distilling the core meaning of the sentence.

Example: in the sentence

"Cats and dogs, like all pets, like cuddles"

the word "pets" represents both cats and dogs well, so those two words are redundant.

We could then summarise the sentence above as "pets like cuddles".

This is clearly a very rough approximation of what a real summary would look like - but it works like a charm!
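
The sentence above can be turned into a toy calculation. This is only a sketch under loud assumptions: the word vectors are made up by hand (cat-ness, dog-ness, cuddle-ness), and the learned query, key and value transformations that real self-attention applies are left out. What remains is the similarity step: each word scores every word with a scaled dot product, and a softmax turns the scores into weights.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    """Similarity between two vectors: larger means more alike."""
    return sum(x * y for x, y in zip(a, b))

def attention_weights(vectors):
    """For each word, weight every word in the sentence by similarity."""
    scale = math.sqrt(len(vectors[0]))
    return [softmax([dot(q, k) / scale for k in vectors]) for q in vectors]

# Made-up vectors: (cat-ness, dog-ness, cuddle-ness). Hypothetical values.
words = ["cats", "dogs", "pets", "cuddles"]
vectors = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [1.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

weights = attention_weights(vectors)
# Total weight each word receives from all the words in the sentence.
received = [sum(row[i] for row in weights) for i in range(len(words))]
most_attended = words[received.index(max(received))]
print(most_attended)  # pets
```

With these made-up numbers, "pets" collects the most weight because it overlaps with both "cats" and "dogs", which matches the intuition that it can stand in for both.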

Why a summary is helpful

A summary essentially highlights key pieces of information and discards the rest. This makes it easier for a model to find important information in a large amount of data.

Interestingly, this is the same philosophy behind search algorithms and the PageRank algorithm, which has been an important part of Google Search.

Read more

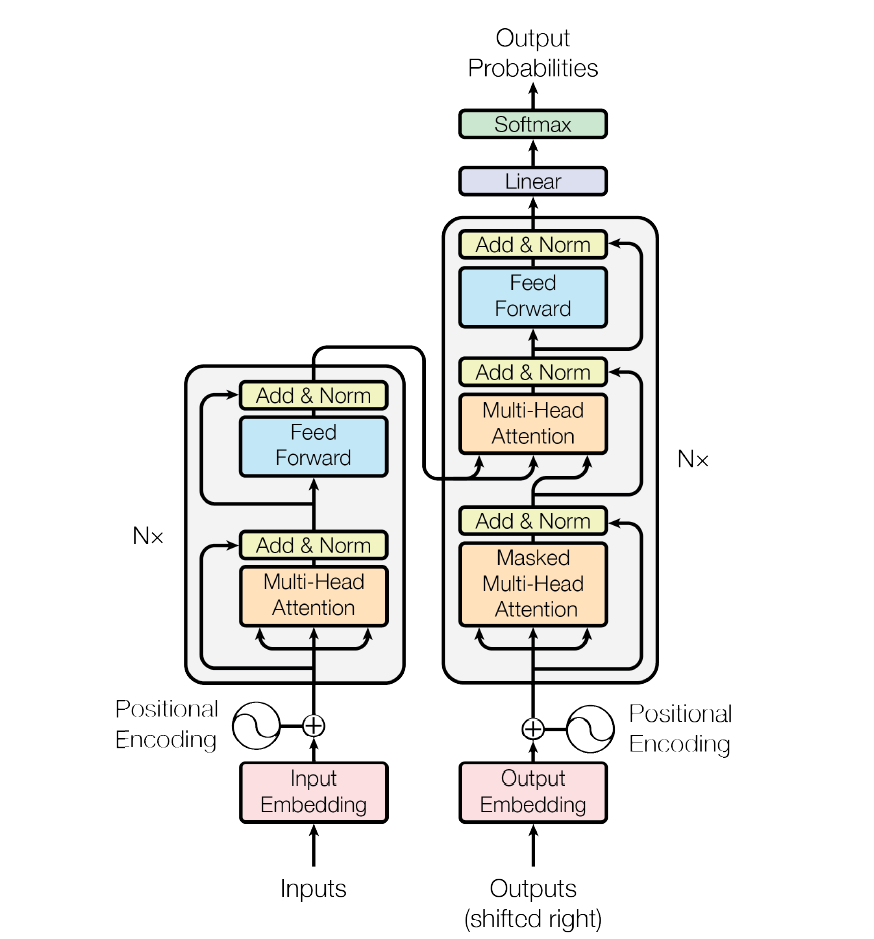

There's plenty of information, videos and other material on the web for those who want to dig deeper. Here I will just point you to the key original paper on attention: "Attention Is All You Need".

If you have any thoughts on this, I am keen to hear them - leave a comment!

Best,

Andrea